Week 1 Quiz Answer

- The weather prediction task.

- The probability of it correctly predicting a future date’s weather.

- The process of the algorithm examining a large amount of historical weather data.

- None of these.

Centigrade/Fahrenheit). Would you treat this as a classification or a regression problem?

- Regression

- Classification

bought/sold) each day. You would like to predict the number of Microsoft shares that will be traded tomorrow.

- Regression

- Classification

unsupervised learning algorithm. Which of the following would you apply supervised learning to? (Select all that apply.) In each case, assume some appropriate dataset is available for your algorithm to learn from.

- Given genetic (DNA) data from a person, predict the odds of him/her developing diabetes over the next 10 years.

- Have a computer examine an audio clip of a piece of music, and classify whether or not there are vocals (i.e., a human voice singing) in that audio clip, or if it is a clip of only musical instruments (and no vocals).

- Given data on how 1000 medical patients respond to an experimental drug (such as effectiveness of the treatment, side effects, etc.), discover whether there are different categories or “types” of patients in terms of how they respond to the drug, and if so what these categories are.

- Given a large dataset of medical records from patients suffering from heart disease, try to learn whether there might be different clusters of such patients for which we might tailor separate treatments.

- Machine learning learns from labeled data.

- Machine learning is the science of programming computers.

- Machine learning is the field of allowing robots to act intelligently.

- Machine learning is the field of study that gives computers the ability to learn without being explicitly programmed.

Question 1)

Consider the problem of predicting how well a student does in their second year of college/university, given how well they did in their first year.

Specifically, let x be equal to the number of “A” grades (including A-, A, and A+ grades) that a student receives in their first year of college (freshman year). We would like to predict the value of y, which we define as the number of “A” grades they get in their second year (sophomore year).

Refer to the following training set of a small sample of different students’ performances (note that this training set will also be referenced in other questions in this quiz). Here each row is one training example. Recall that in linear regression, our hypothesis is $h_\theta(x) = \theta_0 + \theta_1 x$, and we use m to denote the number of training examples.

For the training set given above, what is the value of m? In the box below, please enter your answer (which should be a number between 0 and 10).

Answer: 4

Question 2)

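For this question, continue to assume that we are using the training set given above. Recall our definition of the cost function was $J(\theta_0, \theta_1) = \frac{1}{2m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right)^2$.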

What is J(0,1)? In the box below, please enter your answer (use decimals instead of fractions if necessary, e.g. 1.5).

Answer: 0.5
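The cost function above can be evaluated directly. Below is a minimal Octave sketch. The training set in it is hypothetical, because the quiz's own table is not reproduced in this article, so it does not recompute the 0.5 above. The names `h` and `J` are illustrative.

```octave
% Hypothetical training set: NOT the quiz's table.
x = [1; 2; 3; 4];
y = [2; 4; 6; 8];
m = numel(y);                          % number of training examples

% Hypothesis h_theta(x) = theta0 + theta1 * x
h = @(t0, t1, x) t0 + t1 .* x;

% Cost J(theta0, theta1) = 1/(2m) * sum of squared errors
J = @(t0, t1) (1 / (2 * m)) * sum((h(t0, t1, x) - y) .^ 2);

printf("J(0, 1) = %g\n", J(0, 1));    % squared errors 1 + 4 + 9 + 16 = 30, so 30/8 = 3.75
printf("J(0, 2) = %g\n", J(0, 2));    % this data lies exactly on y = 2x, so J = 0
```

The made-up points lie on a straight line, so the second call returns 0. This is the situation described in Question 5 below.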

Question 3)

Suppose we set $\theta_0 = 0$, $\theta_1 = 1.5$. What is $h_\theta(2)$?

Answer: 3
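Worked out with the hypothesis $h_\theta(x) = \theta_0 + \theta_1 x$:

```latex
h_\theta(2) = \theta_0 + \theta_1 \cdot 2 = 0 + 1.5 \times 2 = 3
```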

Question 4)

In the given figure, the cost function $J(\theta_0, \theta_1)$ has been plotted against $\theta_0$ and $\theta_1$, as shown in ‘Plot 2’. The contour plot for the same cost function is given in ‘Plot 1’. Based on the figure, choose the correct options (check all that apply).

- If we start from point B, gradient descent with a well-chosen learning rate will eventually help us reach at or near point A, as the value of cost function $J(\theta_0, \theta_1)$ is maximum at point A.

- If we start from point B, gradient descent with a well-chosen learning rate will eventually help us reach at or near point A, as the value of cost function $J(\theta_0, \theta_1)$ is minimum at point A.

- Point P (the global minimum of Plot 2) corresponds to point A of Plot 1. (correct)

- If we start from point B, gradient descent with a well-chosen learning rate will eventually help us reach at or near point C, as the value of cost function $J(\theta_0, \theta_1)$ is minimum at point C.

- Point P (the global minimum of Plot 2) corresponds to point of Plot 1.

Question 5)

Suppose that for some linear regression problem (say, predicting housing prices as in the lecture), we have some training set, and for our training set we managed to find some $\theta_0, \theta_1$ such that $J(\theta_0, \theta_1) = 0$. Which of the statements below must then be true? (Check all that apply.)

- For this to be true, we must have $y^{(i)} = 0$ for every value of $i = 1, 2, \ldots, m$.

- For this to be true, we must have $\theta_0 = 0$ and $\theta_1 = 0$ so that $h_\theta(x) = 0$.

- Our training set can be fit perfectly by a straight line, i.e., all of our training examples lie perfectly on some straight line. (correct)

- Gradient descent is likely to get stuck at a local minimum and fail to find the global minimum.

Main Quiz 3

III. Linear Algebra

Question 1)

Let two matrices be

What is A – B?

Answer is:

______________________________________________________________

Question 2)

What is 1/2 * x?

Answer is:

______________________________________________________________

Question 3)

Let u be a 3-dimensional vector, where specifically

What is $u^T$?

Answer is:

______________________________________________________________


Question 4)

Let u be a 3-dimensional vector, where specifically

What is $u^T v$?

(Hint: $u^T$ is a 1×3 dimensional matrix, and v can also be seen as a 3×1 matrix. The answer you want can be obtained by taking the matrix product of $u^T$ and v.)

Answer is:

10
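The hint can be checked numerically. This Octave sketch uses hypothetical values for u and v, because the quiz's vectors are not shown above. It illustrates the 1×3 by 3×1 product rather than reproducing the answer of 10.

```octave
% Hypothetical vectors: NOT the quiz's u and v.
u = [1; 2; 3];
v = [4; 5; 6];

disp(size(u'))                 % 1 3: the transpose of a 3x1 column vector is a 1x3 row vector
ip = u' * v;                   % (1x3) * (3x1) -> 1x1 scalar: 1*4 + 2*5 + 3*6
printf("u' * v = %d\n", ip);   % prints u' * v = 32
```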

______________________________________________________________

Question 5)

Let A and B be 3×3 (square) matrices. Which of the following must necessarily hold true?

- If C = A * B, then C is a 6×6 matrix.

- If C = A * B, then C is a 3×3 matrix. (correct)

- If v is a 3-dimensional vector, then A * B * v is a 3-dimensional vector. (correct)

- A * B = B * A
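Here is a quick Octave check of the two true statements. It also shows why A * B = B * A need not hold. The matrices are arbitrary examples chosen for illustration.

```octave
% Arbitrary 3x3 example matrices
A = [1 2 0; 0 1 0; 0 0 1];
B = [1 0 0; 3 1 0; 0 0 2];
v = [1; 1; 1];

C = A * B;
disp(size(C))                 % 3 3: (3x3) * (3x3) is 3x3, not 6x6
disp(size(A * B * v))         % 3 1: multiplying by a 3x1 vector gives a 3-dimensional vector
disp(isequal(A * B, B * A))   % 0: matrix multiplication is not commutative in general
```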

Machine Learning By Andrew Ng

Week 2 Quiz Answer

Question 1)

Suppose m=4 students have taken some class, and the class had a midterm exam and a final exam. You have collected a dataset of their scores on the two exams, which is as follows:

You’d like to use polynomial regression to predict a student’s final exam score from their midterm exam score. Concretely, suppose you want to fit a model of the form $h_\theta(x) = \theta_0 + \theta_1 x_1 + \theta_2 x_2$, where $x_1$ is the midterm score and $x_2$ is $(\text{midterm score})^2$. Further, you plan to use both feature scaling (dividing by the “max-min”, or range, of a feature) and mean normalization.

What is the normalized feature $x_2^{(4)}$? (Hint: midterm = 89, final = 96 is training example 1.) Please round off your answer to two decimal places and enter it in the text box below.

Answer:

-0.46
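The normalization step itself is short. The Octave sketch below uses hypothetical midterm scores. Only example 1 is given in the question, so this is not the quiz's data and does not reproduce -0.46. The sketch squares the scores to build $x_2$, subtracts the mean, and divides by the range (max - min).

```octave
% Hypothetical midterm scores for m = 4 students (NOT the quiz's table)
midterm = [80; 60; 90; 70];

x2 = midterm .^ 2;             % second feature: (midterm score)^2
mu = mean(x2);                 % mean of the feature over the training set
s  = max(x2) - min(x2);        % range ("max - min") used for feature scaling
x2_norm = (x2 - mu) / s;       % mean normalization followed by scaling

printf("%.2f\n", x2_norm(4));  % (4900 - 5750) / 4500, printed as -0.19
```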

Question 2)

You run gradient descent for 15 iterations with α = 0.3 and compute J(θ) after each iteration. You find that the value of J(θ) increases over time. Based on this, which of the following conclusions seems most plausible?

- α = 0.3 is an effective choice of learning rate.

- Rather than use the current value of α, it’d be more promising to try a larger value of α (say α = 1.0).

- Rather than use the current value of α, it’d be more promising to try a smaller value of α (say α = 0.1). (correct)
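This Octave sketch shows both failure modes side by side. It runs 15 iterations of batch gradient descent for linear regression on a small hypothetical dataset and records J(θ) after each iteration. The point at which α becomes too large depends on the data. On this particular dataset, α = 0.3 happens to diverge, with J growing every iteration. α = 0.01 converges, but slowly, which is the pattern in Question 7 below.

```octave
% Hypothetical one-feature dataset with an intercept column of ones
X = [ones(4, 1), [1; 2; 3; 4]];
y = [2; 4; 6; 8];
m = rows(X);

costJ = @(theta) (1 / (2 * m)) * sum((X * theta - y) .^ 2);

alphas = [0.3, 0.01];
J_hist = zeros(15, numel(alphas));
for k = 1:numel(alphas)
  theta = zeros(2, 1);
  for iter = 1:15
    % Simultaneous update of all parameters
    theta = theta - (alphas(k) / m) * X' * (X * theta - y);
    J_hist(iter, k) = costJ(theta);
  end
end

disp(J_hist([1, 15], :))   % rows: after iterations 1 and 15; columns: alpha = 0.3, 0.01
```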

Question 3)

Suppose you have m = 14 training examples with n = 3 features (excluding the additional all-ones feature for the intercept term, which you should add). The normal equation is $\theta = (X^TX)^{-1}X^Ty$. For the given values of m and n, what are the dimensions of θ, X, and y in this equation?

- X is 14 × 3, y is 14 × 1, θ is 3 × 3

- X is 14 × 4, y is 14 × 4, θ is 4 × 4

- X is 14 × 3, y is 14 × 1, θ is 3 × 1

- X is 14 × 4, y is 14 × 1, θ is 4 × 1 (correct)
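The dimensions can be confirmed with a throwaway Octave run on random data. The values are meaningless; only the sizes matter. The sketch uses `pinv`, as in the course, so it still runs if $X^TX$ happens to be singular.

```octave
m = 14;  n = 3;

X = [ones(m, 1), rand(m, n)];   % add the all-ones intercept column: m x (n + 1)
y = rand(m, 1);                 % targets: m x 1

theta = pinv(X' * X) * X' * y;  % normal equation: (n + 1) x 1

disp(size(X))       % 14 4
disp(size(y))       % 14 1
disp(size(theta))   % 4 1
```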

Question 4)

Suppose you have a dataset with m= 1000000 examples and n = 200000 features for each example. You want to use multivariate linear regression to fit the parameters θ to our data. Should you prefer gradient descent or the normal equation?

- Gradient descent, since $(X^TX)^{-1}$ will be very slow to compute in the normal equation.

- The normal equation, since it provides an efficient way to directly find the solution.

- Gradient descent, since it will always converge to the optimal θ.

- The normal equation, since gradient descent might be unable to find the optimal θ.

Question 5)

Which of the following are reasons for using feature scaling?

- It is necessary to prevent the normal equation from getting stuck in local optima.

- It speeds up gradient descent by making each iteration of gradient descent less expensive to compute.

- It speeds up gradient descent by making it require fewer iterations to get to a good solution. (correct)

- It prevents the matrix $X^TX$ (used in the normal equation) from being non-invertible (singular/degenerate).

Question 6)

Suppose m=4 students have taken some class, and the class had a midterm exam and a final exam. You have collected a dataset of their scores on the two exams, which is as follows:

You’d like to use polynomial regression to predict a student’s final exam score from their midterm exam score. Concretely, suppose you want to fit a model of the form $h_\theta(x) = \theta_0 + \theta_1 x_1 + \theta_2 x_2$, where $x_1$ is the midterm score and $x_2$ is $(\text{midterm score})^2$. Further, you plan to use both feature scaling (dividing by the “max-min”, or range, of a feature) and mean normalization.

What is the normalized feature $x_2$? (Hint: midterm = 89, final = 96 is training example 1.) Please round off your answer to two decimal places and enter it in the text box below.

Answer:

0.32

Question 7)

You run gradient descent for 15 iterations with α = 0.3 and compute J(θ) after each iteration. You find that the value of J(θ) decreases slowly and is still decreasing after 15 iterations. Based on this, which of the following conclusions seems most plausible?

- Rather than use the current value of α, it’d be more promising to try a smaller value of α (say α = 0.1).

- Rather than use the current value of α, it’d be more promising to try a larger value of α (say α = 1.0). (correct)

- α = 0.3 is an effective choice of learning rate.

Question 8)

Suppose you have m = 23 training examples with n = 5 features (excluding the additional all-ones feature for the intercept term, which you should add). The normal equation is $\theta = (X^TX)^{-1}X^Ty$. For the given values of m and n, what are the dimensions of θ, X, and y in this equation?

- X is 23 × 8, y is 23 × 6, θ is 6 × 6

- X is 23 × 5, y is 23 × 1, θ is 5 × 5

- X is 23 × 6, y is 23 × 1, θ is 6 × 1 (correct)

- X is 23 × 5, y is 23 × 1, θ is 5 × 1

Question 1)

Suppose I first execute the following Octave commands:

A = [1 2; 3 4; 5 6];

B = [1 2 3; 4 5 6];

Which of the following are then valid Octave commands? Check all that apply, assuming each option is entered as an Octave command. (Hint: A' denotes the transpose of A.)

- C = A' + B; (correct)

- C = B * A; (correct)

- C = A + B;

- C = B' * A;
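One way to check which commands are valid is to run them. This Octave sketch wraps the two invalid ones in `try`/`catch` so that the dimension errors are caught instead of stopping the script.

```octave
A = [1 2; 3 4; 5 6];    % 3x2
B = [1 2 3; 4 5 6];     % 2x3

C1 = A' + B;            % (2x3) + (2x3): valid
C2 = B * A;             % (2x3) * (3x2) = 2x2: valid

try
  C3 = A + B;           % (3x2) + (2x3): sizes do not agree
  ok3 = true;
catch
  ok3 = false;
end

try
  C4 = B' * A;          % (3x2) * (3x2): inner dimensions 2 and 3 do not agree
  ok4 = true;
catch
  ok4 = false;
end

printf("A' + B: %dx%d, B * A: %dx%d, A + B ok: %d, B' * A ok: %d\n", ...
       size(C1), size(C2), ok3, ok4);
```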

Question 2)


- B = A(:, 1:2); (correct)

- B = A(1:4, 1:2); (correct)

- B = A(0:2, 0:4);

- B = A(1:2, 1:4);
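The question text for this item did not survive, but the options differ only in how they index A. This Octave sketch assumes A is a 4×4 matrix, here a hypothetical `magic(4)`. It shows which indexing expressions are valid and what they return.

```octave
A = magic(4);              % hypothetical 4x4 matrix

B1 = A(:, 1:2);            % all rows, columns 1 and 2 -> 4x2
B2 = A(1:4, 1:2);          % the same block, with rows 1 to 4 written out
B3 = A(1:2, 1:4);          % rows 1 and 2, all four columns -> a different 2x4 block

try
  B4 = A(0:2, 0:4);        % Octave indices start at 1, so index 0 is an error
  zeroIndexOk = true;
catch
  zeroIndexOk = false;
end

disp(isequal(B1, B2))      % 1
disp(size(B3))             % 2 4
disp(zeroIndexOk)          % 0
```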

Question 3)

Let A be a 10×10 matrix and x be a 10-element vector. Your friend wants to compute the product Ax and writes the following code:

v = zeros(10, 1);

for i = 1:10

for j = 1:10

v(i) = v(i) + A(i, j) * x(j);

end

end

How would you vectorize this code to run without any for loops? Check all that apply.

- v = A * x; (correct)

- v = Ax;

- v = x' * A;

- v = sum(A * x);
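A direct comparison makes the equivalence concrete. This Octave sketch runs the loop, with both bounds at 1:10 to match the 10×10 size of A, and compares it against the vectorized product. A and x are hypothetical integer-valued examples, so the two results match exactly.

```octave
A = magic(10);          % hypothetical 10x10 matrix
x = (1:10)';            % hypothetical 10-element column vector

% Loop version from the question (both loops run over 1:10)
v_loop = zeros(10, 1);
for i = 1:10
  for j = 1:10
    v_loop(i) = v_loop(i) + A(i, j) * x(j);
  end
end

v = A * x;              % vectorized: one matrix-vector product

disp(isequal(v, v_loop))   % 1
disp(size(sum(A * x)))     % 1 1: sum collapses the result to a scalar, so that option is wrong
```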

Question 4)

Say you have two column vectors v and w, each with 7 elements (i.e., they have dimensions 7×1). Consider the following code:

z = 0;

for i = 1:7

z = z + v(i) * w(i);

end

Which of the following vectorizations correctly compute z? Check all that apply.

- z = sum(v .* w); (correct)

- z = v' * w; (correct)

- z = v .* w;
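This Octave sketch compares the options against the loop, using hypothetical 7×1 vectors. The element-wise product alone returns a vector rather than the scalar z.

```octave
v = (1:7)';            % hypothetical 7x1 column vectors
w = (7:-1:1)';

z_loop = 0;            % reference result from the original loop
for i = 1:7
  z_loop = z_loop + v(i) * w(i);
end

z1 = sum(v .* w);      % element-wise product, then sum: a scalar
z2 = v' * w;           % (1x7) * (7x1) inner product: a scalar
z3 = v .* w;           % element-wise product only: a 7x1 vector, not z

printf("%d %d %d\n", z_loop, z1, z2);   % prints 84 84 84
disp(size(z3))                          % 7 1
```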

Question 5)

In Octave, many functions work on single numbers, vectors, and matrices. For example, the sin function, when applied to a matrix, will return a new matrix with the sin of each element. But you have to be careful, as certain functions have different behavior. Suppose you have a 7×7 matrix X. You want to compute the log of every element, the square of every element, add 1 to every element, and divide every element by 4. You will store the results in four matrices, A, B, C, D. One way to do so is the following code:

for i = 1:7

for j = 1:7

A(i, j) = log(X(i, j));

B(i, j) = X(i, j)^2;

C(i, j) = X(i, j) + 1;

D(i, j) = X(i, j) / 4;

end

end

Which of the following correctly compute A, B, C, or D? Check all that apply.

- C = X + 1; (correct)

- D = X / 4; (correct)

- B = X .^ 2; (correct)

- B = X ^ 2;
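The element-wise and matrix operators differ only by a dot, which is easy to miss. This Octave sketch uses a hypothetical 7×7 matrix with positive entries so that `log` is defined. It shows that `X .^ 2` squares each entry, while `X ^ 2` computes the matrix product X * X.

```octave
X = magic(7);          % hypothetical 7x7 matrix with positive entries (1 to 49)

A = log(X);            % log of every element
B = X .^ 2;            % element-wise square
C = X + 1;             % add 1 to every element
D = X / 4;             % divide every element by the scalar 4

B_wrong = X ^ 2;       % matrix power: X * X, not the element-wise square

disp(isequal(B, X .* X))        % 1
disp(isequal(B_wrong, X * X))   % 1
disp(isequal(B, B_wrong))       % 0
```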


Week 3 Quiz Answer

In this article, I share the answers to the Week 3 quiz of the Coursera course Machine Learning by Andrew Ng.

Question 1)

Suppose that you have trained a logistic regression classifier, and it outputs on a new example x a prediction $h_\theta(x) = 0.4$. This means (check all that apply):

- Our estimate for P(y=0|x;θ) is 0.4.

- Our estimate for P(y=0|x;θ) is 0.6.

- Our estimate for P(y=1|x;θ) is 0.4.

- Our estimate for P(y=1|x;θ) is 0.6.
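The logistic hypothesis estimates $P(y = 1 \mid x; \theta)$, and the two class probabilities must sum to 1:

```latex
P(y=1 \mid x;\theta) = h_\theta(x) = 0.4, \qquad P(y=0 \mid x;\theta) = 1 - h_\theta(x) = 0.6
```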

Question 2)

Suppose you have the following training set, and fit a logistic regression classifier $h_\theta(x) = g(\theta_0 + \theta_1 x_1 + \theta_2 x_2)$.

Which of the following are true? Check all that apply.

Question 3)

Question 4)

Which of the following statements are true? Check all that apply.

- The sigmoid function $g(z) = \frac{1}{1 + e^{-z}}$ is never greater than one (> 1).

- The cost function J(θ) for logistic regression trained with m > 1 examples is always greater than or equal to zero.

- Linear regression always works well for classification if you classify by using a threshold on the prediction made by linear regression.

- For logistic regression, sometimes gradient descent will converge to a local minimum (and fail to find the global minimum). This is the reason we prefer more advanced optimization algorithms such as fminunc (conjugate gradient/BFGS/L-BFGS/etc).
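The first two statements can be checked numerically. This Octave sketch evaluates the sigmoid over a wide range of inputs and computes the logistic regression cost on a small hypothetical dataset with arbitrary parameters. Each term of the cost is a negative log of a number in (0, 1), so it cannot be negative.

```octave
sigmoid = @(z) 1 ./ (1 + exp(-z));

% The sigmoid stays strictly between 0 and 1 over a wide range of inputs
z = linspace(-20, 20, 401);
g = sigmoid(z);
printf("min g = %g, max g = %.10f\n", min(g), max(g));

% Logistic regression cost on a hypothetical dataset with m = 4 > 1 examples
X = [ones(4, 1), [-2; -1; 1; 2]];
y = [0; 0; 1; 1];
theta = [0; 1];                                  % arbitrary parameters
m = numel(y);
h = sigmoid(X * theta);
J = -(1 / m) * sum(y .* log(h) + (1 - y) .* log(1 - h));
printf("J = %.4f\n", J);                         % every -log(...) term is >= 0, so J >= 0
```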

Question 5)

Answer:

Quiz 2)

Question 1)

You are training a classification model with logistic regression. Which of the following statements are true? Check all that apply.

- Adding many new features to the model helps prevent overfitting on the training set.

- Introducing regularization to the model always results in equal or better performance on the training set.

- Adding a new feature to the model always results in equal or better performance on the training set.

- Introducing regularization to the model always results in equal or better performance on examples not in the training set.

Question 2)

Question 3)

Which of the following statements about regularization are true? Check all that apply.

- Using too large a value of λ can cause your hypothesis to underfit the data.

- Because regularization causes J(θ) to no longer be convex, gradient descent may not always converge to the global minimum (when λ > 0, and when using an appropriate learning rate α).

- Because logistic regression outputs values $0 \le h_\theta(x) \le 1$, its range of output values can only be “shrunk” slightly by regularization anyway, so regularization is generally not helpful for it.

- Using a very large value of λ cannot hurt the performance of your hypothesis; the only reason we do not set λ to be too large is to avoid numerical problems.
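Regularized linear regression has a closed-form solution, which makes it a convenient place to see the effect of a large λ. This Octave sketch uses hypothetical data and the regularized normal equation $\theta = (X^TX + \lambda L)^{-1}X^Ty$. Here L is the identity matrix with its top-left entry set to 0, so $\theta_0$ is not penalized. As λ grows, the slope $\theta_1$ shrinks toward 0, which is how too large a λ leads to underfitting.

```octave
% Hypothetical data that is roughly linear
x = [1; 2; 3; 4; 5];
y = [1.2; 1.9; 3.2; 3.8; 5.1];
X = [ones(5, 1), x];
L = diag([0, 1]);                 % do not regularize the intercept theta_0

lambdas = [0, 1, 100];
theta1 = zeros(size(lambdas));
for k = 1:numel(lambdas)
  theta = (X' * X + lambdas(k) * L) \ (X' * y);   % regularized normal equation
  theta1(k) = theta(2);
end

disp([lambdas; theta1])           % the slope shrinks toward 0 as lambda grows
```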

Question 4)

Question 5)

Week 4 Quiz Answer

Question 1)

Which of the following statements are true? Check all that apply.

- If a neural network is overfitting the data, one solution would be to decrease the regularization parameter λ.

- Suppose you have a multi-class classification problem with three classes, trained with a 3-layer network. Let $a_1^{(3)} = (h_\Theta(x))_1$ be the activation of the first output unit, and similarly $a_2^{(3)} = (h_\Theta(x))_2$ and $a_3^{(3)} = (h_\Theta(x))_3$. Then for any input x, it must be the case that $a_1^{(3)} + a_2^{(3)} + a_3^{(3)} = 1$.

- If a neural network is overfitting the data, one solution would be to increase the regularization parameter λ. (correct)

- In a neural network with many layers, we think of each successive layer as being able to use the earlier layers as features, so as to be able to compute increasingly complex functions. (correct)

Question 2)

Consider the following neural network which takes two binary-valued inputs $x_1, x_2 \in \{0, 1\}$ and outputs $h_\Theta(x)$. Which of the following logical functions does it (approximately) compute?

- NAND (meaning “NOT AND”) (correct)

- AND

- XOR(exclusive OR)

- OR
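The figure with this network's weights is not reproduced here. As an illustration only, a single sigmoid unit with the hypothetical weights [30, -20, -20] (bias, $x_1$, $x_2$) computes NAND. The Octave sketch below evaluates it on all four inputs.

```octave
sigmoid = @(z) 1 ./ (1 + exp(-z));
Theta = [30, -20, -20];             % hypothetical weights: [bias, x1, x2]

inputs = [0 0; 0 1; 1 0; 1 1];
X = [ones(4, 1), inputs];           % prepend the bias unit x0 = 1
h = sigmoid(X * Theta');            % one output per row of inputs

disp([inputs, round(h)])            % last column: 1 1 1 0, i.e. NAND
```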

Question 3)

Question 4)

You have the following neural network:

You’d like to compute the activations of the hidden layer $a^{(2)} \in \mathbb{R}^3$. One way to do so is the following Octave code:

% Theta1 is Theta with superscript "(1)" from lecture

% i.e., the matrix of parameters for the mapping from layer 1 (input) to layer 2

% Theta1 has size 3×3

% Assume 'sigmoid' is a built-in function to compute 1 ./ (1 + exp(-z))

a2 = zeros(3, 1);

for i = 1:3

for j = 1:3

a2(i) = a2(i) + x(j) * Theta1(i, j);

end

a2(i) = sigmoid(a2(i));

end

You want to have a vectorized implementation of this (i.e., one that does not use for loops). Which of the following implementations correctly compute $a^{(2)}$? Check all that apply.

- a2 = sigmoid(Theta1 * x); (correct)

- a2 = sigmoid(x * Theta1);

- a2 = sigmoid(Theta2 * x);

- z = sigmoid(x); a2 = Theta1 * z;
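This Octave sketch checks that the loop and `sigmoid(Theta1 * x)` agree. Theta1 and x are hypothetical values. Treating x(1) = 1 as the bias unit is an assumption made for illustration. Row i of Theta1 times x gives exactly the inner sum the loop builds for a2(i).

```octave
sigmoid = @(z) 1 ./ (1 + exp(-z));

Theta1 = [0.1 -0.2 0.3; 0.5 0.4 -0.6; -0.7 0.8 0.9];   % hypothetical 3x3 parameters
x = [1; 0.5; -1.5];                                     % hypothetical input; x(1) = 1 assumed to be the bias unit

% Loop version from the question
a2_loop = zeros(3, 1);
for i = 1:3
  for j = 1:3
    a2_loop(i) = a2_loop(i) + x(j) * Theta1(i, j);
  end
  a2_loop(i) = sigmoid(a2_loop(i));
end

% Vectorized version: (3x3) * (3x1) gives all three pre-activations at once
a2 = sigmoid(Theta1 * x);

printf("max difference: %g\n", max(abs(a2 - a2_loop)));
```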

Week 5 Quiz Answer

Question 1)

Question 2)

Suppose Theta1 is a 5×3 matrix, and Theta2 is a 4×6 matrix. You set thetaVec = [Theta1(:); Theta2(:)]. Which of the following correctly recovers Theta2?

- reshape(thetaVec(16:39), 4, 6) (correct)

- reshape(thetaVec(15:38), 4, 6)

- reshape(thetaVec(16:24), 4, 5)

- reshape(thetaVec(15:39), 4, 6)

- reshape(thetaVec(16:39), 6, 4)
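Unrolling stacks the matrices column by column. Theta1 fills elements 1 to 15, so Theta2 occupies elements 16 to 39. This Octave sketch uses hypothetical matrices of the stated sizes to confirm that the round trip recovers Theta2 exactly.

```octave
Theta1 = reshape(1:15, 5, 3);       % hypothetical 5x3 matrix (15 elements)
Theta2 = reshape(101:124, 4, 6);    % hypothetical 4x6 matrix (24 elements)

thetaVec = [Theta1(:); Theta2(:)];  % unroll column by column: 15 + 24 = 39 elements

% Theta2 occupies elements 16 through 39 of thetaVec
Theta2_back = reshape(thetaVec(16:39), 4, 6);
disp(isequal(Theta2_back, Theta2))  % 1
```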

Question 3)

Question 4)

Which of the following statements are true? Check all that apply.

- Using gradient checking can help verify if one’s implementation of backpropagation is bug-free. (correct)

- Gradient checking is useful if we are using one of the advanced optimization methods (such as in fminunc) as our optimization algorithm. However, it serves little purpose if we are using gradient descent.

- For computational efficiency, after we have performed gradient checking to verify that our backpropagation code is correct, we usually disable gradient checking before using backpropagation to train the network. (correct)

- Computing the gradient of the cost function in a neural network has the same efficiency when we use backpropagation or when we numerically compute it using the method of gradient checking.
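Gradient checking compares a numerical estimate of each partial derivative against the analytic gradient. The Octave sketch below applies the two-sided difference $(J(\theta + \epsilon e_i) - J(\theta - \epsilon e_i)) / (2\epsilon)$ to a hypothetical cost $J(\theta) = \sum_i \theta_i^3$. That cost's gradient, $3\theta_i^2$, is known exactly. In a real network the analytic gradient would come from backpropagation. The sketch also shows why the check is slow: it needs two evaluations of J per parameter.

```octave
J = @(theta) sum(theta .^ 3);            % hypothetical cost function
analyticGrad = @(theta) 3 * theta .^ 2;  % its exact gradient (stands in for backprop)

theta = [1; -2; 0.5];
epsilon = 1e-4;
numGrad = zeros(size(theta));
for i = 1:numel(theta)
  step = zeros(size(theta));
  step(i) = epsilon;
  % Two-sided difference: two cost evaluations per parameter
  numGrad(i) = (J(theta + step) - J(theta - step)) / (2 * epsilon);
end

printf("max abs difference: %g\n", max(abs(numGrad - analyticGrad(theta))));
```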

Question 5)

Which of the following statements are true? Check all that apply.

- Suppose we have a correct implementation of backpropagation, and are training a neural network using gradient descent. Suppose we plot J(θ) as a function of the number of iterations, and find that it is increasing rather than decreasing. One possible cause of this is that the learning rate α is too large.

- If we are training a neural network using gradient descent, one reasonable “debugging” step to make sure it is working is to plot J(θ) as a function of the number of iterations, and make sure it is decreasing (or at least non-increasing) after each iteration.

- Suppose that the parameter $\theta^{(1)}$ is a square matrix (meaning the number of rows equals the number of columns). If we replace $\theta^{(1)}$ with its transpose $(\theta^{(1)})^T$, then we have not changed the function that the network is computing.

- Suppose we are using gradient descent with learning rate α. For logistic regression and linear regression, J(θ) was a convex optimization problem and thus we did not want to choose a learning rate α that is too large. For a neural network however, J(θ) may not be convex, and thus choosing a very large value of α can only speed up convergence.

Week 6 Quiz Answer

- Neither

- High variance

- High bias

- Get more training examples

- Use fewer training examples.

- Try adding polynomial features.

- Try using a smaller set of features.

- Use fewer training examples.

- Try adding polynomial features.

- Try evaluating the hypothesis on a cross validation set rather than the test set.

- Try decreasing the regularization parameter λ.

- Suppose you are training a logistic regression classifier using polynomial features and want to select what degree polynomial (denoted d in the lecture videos) to use. After training the classifier on the entire training set, you decide to use a subset of the training examples as a validation set. This will work just as well as having a validation set that is separate (disjoint) from the training set.

- A typical split of a dataset into training, validation and test sets might be 60% training set, 20% validation set, and 20% test set.

- Suppose you are using linear regression to predict housing prices, and your dataset comes sorted in order of increasing sizes of houses. It is then important to randomly shuffle the dataset before splitting it into training, validation and test sets, so that we don’t have all the smallest houses going into the training set and all the largest houses going into the test set.

- It is okay to use data from the test set to choose the regularization parameter λ but not the model parameters (θ).

- A model with more parameters is more prone to overfitting and typically has higher variance.

- If a learning algorithm is suffering from high bias, only adding more training examples may not improve the test error significantly.

- When debugging learning algorithms, it is useful to plot a learning curve to understand if there is a high bias or high variance problem.

- If a neural network has much lower training error than test error, then adding more layers will help bring the test error down because we can fit the test set better.

Question 1)

You are working on a spam classification system using regularized logistic regression. “Spam” is the positive class (y = 1) and “not spam” is the negative class (y = 0). You have trained your classifier and there are m = 1000 examples in the cross-validation set. The chart of predicted class vs. actual class is:

For reference:

- Accuracy = (true positives + true negatives)/(total examples)

- Precision = (true positives)/(true positives + false positives)

- Recall = (true positives)/(true positives + false negatives)

- F1 score = (2 * precision * recall)/(precision + recall)

What is the classifier’s F1 score (as a value from 0 to 1)?

Enter your answer in the box below. If necessary, provide at least two digits after the decimal point.

Answer:

0.157
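With counts from a confusion matrix, the formulas above translate directly into code. The counts in this Octave sketch are hypothetical, because the quiz's chart is not reproduced here, so the result is not the 0.157 above.

```octave
% Hypothetical counts for m = 1000 cross-validation examples
tp = 40; fp = 10; fn = 60; tn = 890;

accuracy  = (tp + tn) / (tp + tn + fp + fn);
precision = tp / (tp + fp);
recall    = tp / (tp + fn);
F1        = 2 * precision * recall / (precision + recall);

printf("accuracy %.3f, precision %.3f, recall %.3f, F1 %.3f\n", ...
       accuracy, precision, recall, F1);
% prints: accuracy 0.930, precision 0.800, recall 0.400, F1 0.533
```

The high accuracy next to a much lower F1 score is the pattern that makes accuracy misleading on skewed classes.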

Question 2)

Suppose a massive dataset is available for training a learning algorithm. Training on a lot of data is likely to give good performance when two of the following conditions hold true.

Which are the two?

- We train a model that does not use regularization

- We train a learning algorithm with a small number of parameters (that is thus unlikely to overfit).

- We train a learning algorithm with a large number of parameters (that is able to learn/represent fairly complex functions). (correct)

- The features x contain sufficient information to predict y accurately. (For example, one way to verify this is if a human expert on the domain can confidently predict y when given only x.) (correct)

Question 3)

Suppose you have trained a logistic regression classifier which is outputting $h_\theta(x)$.

Currently, you predict 1 if $h_\theta(x) > \text{threshold}$, and predict 0 if $h_\theta(x) < \text{threshold}$, where currently the threshold is set to 0.5.

Suppose you increase the threshold to 0.9. Which of the following are true? Check all that apply.

- The classifier is likely to now have lower precision.

- The classifier is likely to now have lower recall. (correct)

- The classifier is likely to have unchanged precision and recall, but higher accuracy.

- The classifier is likely to have unchanged precision and recall, but lower accuracy.
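Raising the threshold means fewer examples are predicted positive. Those that remain are more likely to be true positives, while more actual positives are missed. This Octave sketch uses hypothetical classifier outputs and labels to compute precision and recall at both thresholds. It predicts 1 when $h_\theta(x) \ge$ threshold; none of the example outputs equals a threshold, so the tie rule does not matter here.

```octave
% Hypothetical classifier outputs h(x) and true labels y
h = [0.95; 0.85; 0.70; 0.60; 0.55; 0.40; 0.30; 0.92; 0.65; 0.20];
y = [1;    1;    1;    0;    1;    0;    0;    1;    0;    0];

thresholds = [0.5, 0.9];
prec = zeros(size(thresholds));
rec  = zeros(size(thresholds));
for k = 1:numel(thresholds)
  pred = h >= thresholds(k);        % predict 1 when h(x) >= threshold
  tp = sum(pred & y);
  fp = sum(pred & ~y);
  fn = sum(~pred & y);
  prec(k) = tp / (tp + fp);
  rec(k)  = tp / (tp + fn);
  printf("threshold %.1f: precision %.2f, recall %.2f\n", thresholds(k), prec(k), rec(k));
end
```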

Question 4)

Which of the following statements are true? Check all that apply.

- If your model is underfitting the training set, then obtaining more data is likely to help.

- It is a good idea to spend a lot of time collecting a large amount of data before building your first version of a learning algorithm.

- After training a logistic regression classifier, you must use 0.5 as your threshold for predicting whether an example is positive or negative.

- Using a very large training set makes it unlikely for the model to overfit the training data. (correct)

- On skewed datasets (e.g., when there are more positive examples than negative examples), accuracy is not a good measure of performance and you should instead use F1 score based on the precision and recall. (correct)

Week 7 Quiz Answer

Quiz 1)

Question 1)

Suppose you have trained an SVM classifier with a Gaussian kernel, and it learned the following decision boundary on the training set:

When you measure the SVM’s performance on a cross-validation set, it does poorly. Should you try increasing or decreasing C? Increasing or decreasing σ²?

- It would be reasonable to try decreasing C. It would also be reasonable to try decreasing σ².

- It would be reasonable to try decreasing C. It would also be reasonable to try increasing σ².

- It would be reasonable to try increasing C. It would also be reasonable to try decreasing σ².

- It would be reasonable to try increasing C. It would also be reasonable to try increasing σ².

Question 2)

Question 3)

Question 4)

Suppose you have a dataset with n = 10 features and m = 5000 examples. After training your logistic regression classifier with gradient descent, you find that it has underfit the training set and does not achieve the desired performance on the training or cross-validation sets.

Which of the following might be promising steps to take? Check all that apply.

- Use an SVM with a Gaussian kernel.

- Create / add new polynomial features. (correct)

- Increase the regularization parameter λ.

- Use an SVM with a linear kernel, without introducing new features.

Question 5)

Which of the following statements are true? Check all that apply.

- It is important to perform feature normalization before using the Gaussian kernel. (correct)

- Suppose you are using SVMs to do multi-class classification and would like to use the one-vs-all approach. If you have K different classes, you will train K - 1 different SVMs.

- If the data are linearly separable, an SVM using a linear kernel will return the same parameters θ regardless of the chosen value of C (i.e., the resulting value of θ does not depend on C).

- The maximum value of the Gaussian kernel (i.e., $\text{sim}(x, l^{(1)})$) is 1.
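The Gaussian kernel is $\exp(-\lVert x - l \rVert^2 / (2\sigma^2))$. It equals 1 exactly when x coincides with the landmark and decays toward 0 with distance. The distance sums squared differences across all features, so a feature on a much larger scale would dominate it, which is why normalization matters. In this Octave sketch, `gaussianKernel` is a hypothetical local helper, and the landmark and points are made up.

```octave
% Gaussian kernel similarity between an example x and a landmark l
gaussianKernel = @(x, l, sigma2) exp(-sum((x - l) .^ 2) / (2 * sigma2));

l = [1; 2];                                      % hypothetical landmark
printf("%g\n", gaussianKernel(l, l, 1));         % 1: x equal to l gives the maximum
printf("%.4f\n", gaussianKernel([2; 2], l, 1));  % exp(-0.5) = 0.6065
```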

Week 8 Quiz Answer

Question 1)

For which of the following tasks might K-means clustering be a suitable algorithm? Select all that apply.

- Given many emails, you want to determine if they are Spam or Non-Spam emails.

- Given a set of news articles from many different news websites, find out what the main topics covered are. (correct)

- Given historical weather records, predict if tomorrow’s weather will be sunny or rainy.

- From the user usage patterns on a website, figure out what different groups of users exist. (correct)

Question 2)

- c(i) = 2

- c(i) = 1

- c(i) is not assigned

- c(i) = 3

Question 3)

K-means is an iterative algorithm, and two of the following steps are repeatedly carried out in its inner loop. Which two?

- The cluster centroid assignment step, where each cluster centroid μk is assigned (by setting it equal) to the closest training example x(i).

- Move each cluster centroid μk by setting it to be equal to the closest training example x(i).

- The cluster assignment step, where the parameters c(i) are updated. (correct)

- Move the cluster centroids, where the centroids μk are updated. (correct)
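The two inner-loop steps are short to write out. This Octave sketch runs a fixed number of iterations on hypothetical 2-D data with K = 2 and hand-picked initial centroids. For brevity it does not handle a cluster that ends up empty.

```octave
% Hypothetical 2-D data (one example per row) and K = 2 initial centroids
X  = [1 1; 1.5 2; 1 0.5; 8 8; 9 8.5; 8.5 9];
mu = [1 1; 9 8.5];
K  = rows(mu);
m  = rows(X);

for iter = 1:10
  % Cluster assignment step: c(i) = index of the centroid closest to x(i)
  dists = zeros(m, K);
  for k = 1:K
    dists(:, k) = sum((X - mu(k, :)) .^ 2, 2);
  end
  [~, c] = min(dists, [], 2);

  % Move centroid step: mu_k = mean of the examples assigned to cluster k
  for k = 1:K
    mu(k, :) = mean(X(c == k, :), 1);
  end
end

disp(c')    % 1 1 1 2 2 2
disp(mu)    % approx. [1.1667 1.1667; 8.5 8.5]
```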

Question 4)

Suppose you have an unlabeled dataset {x(1), …, x(m)}. You run K-means with 50 different random initializations, and obtain 50 different clusterings of the data. What is the recommended way for choosing which one of these 50 clusterings to use?

- Use the elbow method.

- Manually examine the clusterings, and pick the best one.

- Plot the data and the cluster centroids, and pick the clustering that gives the most “coherent” cluster centroids.

- Compute the distortion function $J(c^{(1)}, \ldots, c^{(m)}, \mu_1, \ldots, \mu_K)$, and pick the one that minimizes this.
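The distortion is the average squared distance from each example to the centroid it is assigned to. Comparing it across runs picks the best clustering. This Octave sketch scores two hypothetical clusterings of the same made-up data, standing in for the results of two random initializations, and keeps the one with the lower distortion.

```octave
X = [1 1; 1.5 2; 1 0.5; 8 8; 9 8.5; 8.5 9];     % hypothetical data, one example per row

% Distortion: average squared distance from each x(i) to its assigned centroid mu_c(i)
distortion = @(X, c, mu) mean(sum((X - mu(c, :)) .^ 2, 2));

% Two hypothetical clusterings, e.g. results of two different random initializations
c_a = [1; 1; 1; 2; 2; 2];   mu_a = [7/6 7/6; 8.5 8.5];
c_b = [1; 1; 2; 2; 2; 2];   mu_b = [1.25 1.5; 6.625 6.5];

J = [distortion(X, c_a, mu_a), distortion(X, c_b, mu_b)];
[~, best] = min(J);
printf("distortions: %.4f %.4f -> keep clustering %d\n", J(1), J(2), best);
```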

Question 5)

Which of the following statements are true? Select all that apply.

- The standard way of initializing K-means is setting $\mu_1 = \cdots = \mu_K$ to be equal to a vector of zeros.

- Since K-means is an unsupervised learning algorithm, it cannot overfit the data, and thus it is always better to have as large a number of clusters as is computationally feasible.

- If we are worried about K-means getting stuck in bad local optima, one way to ameliorate (reduce) this problem is if we try using multiple random initializations. (correct)

- For some datasets, the “right” or “correct” value of K (the number of clusters) can be ambiguous, and hard even for a human expert looking carefully at the data to decide. (correct)

Quiz 2

Question 1)

Consider the following 2D dataset:

Which of the following figures correspond to possible values that PCA may return for $u^{(1)}$ (the first eigenvector / first principal component)? Check all that apply (you may have to check more than one figure).

Answer:

Question 2)

Which of the following is a reasonable way to select the number of principal components k?

(Recall that n is the dimensionality of the input data and m is the number of input examples.)

- Choose k to be the largest value so that at least 99% of the variance is retained.

- Use the elbow method.

- Choose k to be the smallest value so that at least 99% of the variance is retained.
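The variance retained for each k can be read off the singular values of the covariance matrix. This Octave sketch uses hypothetical data whose third feature is the sum of the first two, so the examples lie in a 2-D subspace. It mean-normalizes and scales the features, runs `svd` on the covariance matrix, and picks the smallest k that keeps at least 99% of the variance.

```octave
% Hypothetical data: the third feature is the sum of the first two,
% so the examples actually lie in a 2-D subspace of R^3.
a = (1:10)';
b = [2; -1; 3; 0; 1; -2; 4; 1; -3; 2];
X = [a, b, a + b];
m = rows(X);

X_norm = (X - mean(X)) ./ std(X);    % mean normalization and feature scaling
Sigma = (X_norm' * X_norm) / m;      % n x n covariance matrix
[U, S, V] = svd(Sigma);

s = diag(S);
retained = cumsum(s) / sum(s);       % fraction of variance retained for k = 1, 2, 3
k = find(retained >= 0.99, 1);       % smallest k that keeps at least 99%
disp(retained')
printf("k = %d\n", k);
```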

- Choose k to be 99% of m (i.e., k = 0.99 * m, rounded to the nearest integer).

Question 3)

Suppose someone tells you that they ran PCA in such a way that “95% of the variance was retained.” What is an equivalent statement to this?

Question 4)

Which of the following statements are true? Check all that apply.

- Feature scaling is not useful for PCA, since the eigenvector calculation (such as using Octave’s svd (Sigma) routine) takes care of this automatically.

- Given an input x ∈ Rn, PCA compresses it to a lower-dimensional vector z ∈ Rk.

- PCA can be used only to reduce the dimensionality of data by 1 (such as 3D to 2D, or 2D to 1D).

- If the input features are on very different scales, it is a good idea to perform feature scaling before applying PCA. (correct)

Question 5)

Which of the following are recommended applications of PCA? Select all that apply.

- To get more features to feed into a learning algorithm.

- Preventing overfitting: Reduce the number of features (in a supervised learning problem), so that there are fewer parameters to learn.

- Data visualization: Reduce data to 2D (or 3D) so that it can be plotted. (correct)

- Data compression: Reduce the dimension of your data, so that it takes up less memory / disk space. (correct)

Week 9 Quiz Answer

Question 1)

For which of the following problems would anomaly detection be a suitable algorithm?

- Given data from credit card transactions, classify each transaction according to type of purchase (for example: food, transportation, clothing).

- Given an image of a face, determine whether or not it is the face of a particular famous individual.

- From a large set of primary care patient records, identify individuals who might have unusual health conditions.

- Given a dataset of credit card transactions, identify unusual transactions to flag them as possibly fraudulent.

Question 2)

Suppose you have trained an anomaly detection system that flags anomalies when p(x) is less than ε, and you find on the cross-validation set that it has too many false positives (flagging too many things as anomalies). What should you do?

- Decrease ε

- Increase ε
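This minimal Python sketch shows why lowering ε reduces false positives. It assumes the per-feature Gaussian model used in the course, with parameters μ_j and σ_j² already fitted. The function names and the numbers are illustrative only.

```python
import math


def gaussian(x, mu, sigma2):
    """Univariate normal density p(x; mu, sigma^2)."""
    return math.exp(-((x - mu) ** 2) / (2 * sigma2)) / math.sqrt(2 * math.pi * sigma2)


def p(x, mus, sigma2s):
    """Product of per-feature densities, treating features as independent."""
    result = 1.0
    for xj, mu, s2 in zip(x, mus, sigma2s):
        result *= gaussian(xj, mu, s2)
    return result


def flag_anomalies(examples, mus, sigma2s, epsilon):
    """Indices of examples whose density falls below the threshold epsilon."""
    return [i for i, x in enumerate(examples) if p(x, mus, sigma2s) < epsilon]


examples = [[0.0], [1.0], [2.0], [3.0]]
print(flag_anomalies(examples, [0.0], [1.0], epsilon=0.1))   # [2, 3]
print(flag_anomalies(examples, [0.0], [1.0], epsilon=0.01))  # [3]
```

Lowering ε from 0.1 to 0.01 shrinks the set of flagged examples. That is the effect you want when too many normal examples are being flagged.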

Question 3)

Suppose you are developing an anomaly detection system to catch manufacturing defects in airplane engines. Your model uses

You have two features, x1 = vibration intensity and x2 = heat generated. Both x1 and x2 take on values between 0 and 1 (and are strictly greater than 0), and for most “normal” engines you expect that x1 ≈ x2. One of the suspected anomalies is that a flawed engine may vibrate very intensely even without generating much heat (large x1, small x2), even though the particular values of x1 and x2 may not fall outside their typical ranges of values. What additional feature x3 should you create to capture these types of anomalies?

Answer
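Here x1 is vibration intensity and x2 is heat generated. One feature that fits the description is the ratio x3 = x1 / x2. This is offered as a sketch, not a reproduction of the missing answer figure. The ratio stays near 1 when x1 ≈ x2 and grows large exactly when vibration is high relative to heat, even if x1 and x2 each look typical on their own. `ratio_feature` is a hypothetical helper.

```python
def ratio_feature(x1, x2):
    """Hypothetical extra feature x3 = x1 / x2 (vibration intensity / heat generated).

    Both inputs are assumed to lie in (0, 1], as stated in the question, so the
    division is always defined.
    """
    return x1 / x2


normal_engine = ratio_feature(0.6, 0.6)   # x1 ≈ x2, so x3 ≈ 1
suspect_engine = ratio_feature(0.9, 0.1)  # strong vibration, little heat: x3 ≈ 9
```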

Question 4)

Which of the following are true? Check all that apply.

- If you are developing an anomaly detection system, there is no way to make use of labeled data to improve your system.

- If you have a large labeled training set with many positive examples and many negative examples, the anomaly detection algorithm will likely perform just as well as a supervised learning algorithm such as an SVM.

- When choosing features for an anomaly detection system, it is a good idea to look for features that take on unusually large or small values for (mainly the) anomalous examples.

- If you do not have any labeled data (or if all your data has label y = 0), then it is still possible to learn p(x), but it may be harder to evaluate the system or choose a good value of ε.

Question 5)

You have a 1-D dataset {x(1), …, x(m)} and you want to detect outliers in the dataset. You first plot the dataset and it looks like this:

Answer

Quiz 2)

Question 1)

Suppose you run a bookstore, and have ratings (1 to 5 stars) of books. Your collaborative filtering algorithm has learned a parameter vector θ(j) for user j, and a feature vector for each book. You would like to compute the “training error”, meaning the average squared error of your system’s predictions on all the ratings that you have gotten from your users. Which of these are correct ways of doing so (check all that apply)?

For this problem, let m be the total number of ratings you have gotten from your users. (Another way of saying this is that m

[Hint: Two of the four options below are correct.]

Answer
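The training error described here averages the squared prediction error over the m observed ratings only. In the course's r(i,j) / y(i,j) notation, which is assumed here because this section does not define it, that is (1/m) Σ over pairs (i, j) with r(i,j) = 1 of ((θ(j))ᵀ x(i) − y(i,j))². The following is a minimal Python sketch of that formula. The dict-of-ratings layout and the function name are hypothetical.

```python
def cf_training_error(ratings, theta, x):
    """Average squared error of collaborative-filtering predictions on observed ratings.

    ratings: dict mapping (i, j) -> y(i,j), one entry per rating book i received
             from user j (i.e. every pair with r(i,j) = 1), so m = len(ratings).
    theta:   theta[j] is the learned parameter vector of user j.
    x:       x[i] is the learned feature vector of book i.
    """
    m = len(ratings)
    total = 0.0
    for (i, j), y in ratings.items():
        prediction = sum(t * f for t, f in zip(theta[j], x[i]))  # (theta(j))^T x(i)
        total += (prediction - y) ** 2
    return total / m


theta = [[1.0, 2.0]]            # one user
x = [[1.0, 1.0], [2.0, 0.0]]    # two books
ratings = {(0, 0): 3.0, (1, 0): 4.0}
print(cf_training_error(ratings, theta, x))  # predictions 3 and 2 -> (0 + 4) / 2 = 2.0
```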

Question 2)

In which of the following situations will a collaborative filtering system be the most appropriate learning algorithm (compared to linear or logistic regression)?

- You own a clothing store that sells many styles and brands of jeans. You have collected reviews of the different styles and brands from frequent shoppers, and you want to use these reviews to offer those shoppers discounts on the jeans you think they are most likely to purchase.

- You manage an online bookstore and you have the book ratings from many users. You want to learn to predict the expected sales volume (number of books sold) as a function of the average rating of a book.

- You’re an artist and hand-paint portraits for your clients. Each client gets a different portrait (of themselves) and gives you 1-5 star rating feedback, and each client purchases at most 1 portrait. You’d like to predict what rating your next customer will give you.

- You run an online bookstore and collect the ratings of many users. You want to use this to identify what books are similar to each other (i.e., if one user likes a certain book, what are other books that she might also like?)

Question 3)

You run a movie empire, and want to build a movie recommendation system based on collaborative filtering. There were three popular review websites (which we’ll call A, B and C) where users go to rate movies, and you have just acquired all three companies that run these websites. You’d like to merge the three companies’ datasets together to build a single, unified system. On website A, users rank a movie as having 1 through 5 stars. On website B, users rank on a scale of 1-10, and decimal values (e.g., 7.5) are allowed. On website C, the ratings are from 1 to 100. You also have enough information to identify users/movies on one website with users/movies on a different website. Which of the following statements is true?

- You can combine all three training sets into one as long as you perform mean normalization and feature scaling after you merge the data.

- You can combine all three training sets into one without any modification and expect high performance from a recommendation system.

- You can merge the three datasets into one, but you should first normalize each dataset separately by subtracting the mean and then dividing by (max – min), where (max – min) is (5 – 1), (10 – 1), or (100 – 1) for the three websites respectively.

- It is not possible to combine these websites’ data. You must build three separate recommendation systems.
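The per-website normalization described in the third option can be sketched as follows. Each site's ratings are centered on that site's mean and divided by that site's (max – min). After this step, ratings from all three sites are on comparable scales and can be merged. `normalize_site` is a hypothetical helper, and ratings are assumed to be plain lists of numbers.

```python
def normalize_site(ratings, low, high):
    """Center one site's ratings on their mean, then divide by the scale width (high - low)."""
    mean = sum(ratings) / len(ratings)
    return [(r - mean) / (high - low) for r in ratings]


site_a = normalize_site([1.0, 3.0, 5.0], low=1, high=5)       # 1-5 stars
site_c = normalize_site([1.0, 50.5, 100.0], low=1, high=100)  # 1-100 scale
print(site_a)  # [-0.5, 0.0, 0.5]
print(site_c)  # [-0.5, 0.0, 0.5]
```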

Question 4)

Which of the following are true of collaborative filtering systems? Check all that apply.

- To use collaborative filtering, you need to manually design a feature vector for every item (e.g. movie) in your dataset, that describes that item’s most important properties.

- If you have a dataset of users’ ratings on some products, you can use these to predict one user’s preferences on products he has not rated.

- When using gradient descent to train a collaborative filtering system, it is okay to initialize all the parameters (x(i) and θ(j)) to zero.

Question 5)

Suppose you have two matrices A and B where A is 5×3 and B is 3×5. Their product is C=AB, a 5×5 matrix. Furthermore, you have a 5×5 matrix R where every entry is 0 or 1. You want to find the sum of all elements C(i,j) for which the corresponding R(i,j) is 1, and ignore all elements C(i,j) where R(i,j) = 0. One way to do so is the following code:

    % Sum the entries C(i, j) of C = A * B wherever R(i, j) == 1
    C = A * B;
    total = 0;
    for i = 1:5
      for j = 1:5
        if (R(i, j) == 1)
          total = total + C(i, j);  % Octave is case-sensitive: C, not c
        end
      end
    end

Which of the following pieces of Octave code will also correctly compute this total? Check all that apply. Assume all options are in code.

- total = sum(sum((A * B) .* R))

- C = (A * B) .* R; total = sum(C(:));

- total = sum(sum((A + B) * R));

- C= (A + B) * R; total = sum(C(:));
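The loop and an elementwise (`.*`) product with R compute the same total, and a small Python sketch can check this. Python is used only for illustration. `matmul`, `masked_sum_loop` and `masked_sum_elementwise` are hypothetical helpers. `masked_sum_elementwise` mirrors what `sum(sum((A * B) .* R))` computes in Octave.

```python
def matmul(A, B):
    """Plain-Python matrix product of A (p x q) and B (q x r)."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def masked_sum_loop(A, B, R):
    """Mirror of the Octave loop: add C(i, j) only where R(i, j) == 1."""
    C = matmul(A, B)
    total = 0
    for i in range(len(C)):
        for j in range(len(C[0])):
            if R[i][j] == 1:
                total += C[i][j]
    return total


def masked_sum_elementwise(A, B, R):
    """Analogue of sum(sum((A * B) .* R)): multiply entry by entry with R, then sum."""
    C = matmul(A, B)
    return sum(C[i][j] * R[i][j] for i in range(len(C)) for j in range(len(C[0])))
```

Because R holds only 0s and 1s, multiplying by R zeroes out exactly the entries the loop skips. Adding (A + B) or a matrix product with R does not have that effect.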

Week 10 Quiz Answer

Question 1)

Suppose you are training a logistic regression classifier using stochastic gradient descent. You find that the cost (say, cost(θ, (x(i), y(i))), averaged over the last 500 examples), plotted as a function of the number of iterations, is slowly increasing over time. Which of the following changes are likely to help?

- Try halving (decreasing) the learning rate α, and see if that causes the cost to now consistently go down; and if not, keep halving it until it does.

- Try averaging the cost over a smaller number of examples (say 250 examples instead of 500) in the plot.

- This is not possible with stochastic gradient descent, as it is guaranteed to converge to the optimal parameters θ.

- Use fewer examples from your training set.
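The monitoring scheme in this question can be sketched in Python: average the per-example cost over blocks of examples and watch the trend. The sketch also shuffles the training set before the first pass. This is a minimal sketch under stated assumptions: plain lists instead of matrices, non-overlapping blocks of `window` examples, and hypothetical function names.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def sgd_logistic(data, alpha, window=500, epochs=1, seed=0):
    """Stochastic gradient descent for logistic regression, with cost monitoring.

    data: list of (x, y) pairs; x is a list of floats with a leading 1.0 bias term,
          y is 0 or 1.
    Returns (theta, averages): averages[t] is the mean of cost(theta, (x(i), y(i)))
    over the t-th block of `window` examples, each cost computed just before
    theta is updated on that example.
    """
    rng = random.Random(seed)
    data = list(data)
    rng.shuffle(data)  # randomly reorder the training set before starting
    theta = [0.0] * len(data[0][0])
    block, averages = [], []
    for _ in range(epochs):
        for x, y in data:
            h = sigmoid(sum(t * f for t, f in zip(theta, x)))
            hc = min(max(h, 1e-12), 1.0 - 1e-12)  # clip only to keep log() finite
            block.append(-(y * math.log(hc) + (1 - y) * math.log(1.0 - hc)))
            if len(block) == window:
                averages.append(sum(block) / window)
                block = []
            # One SGD step using this single example.
            theta = [t - alpha * (h - y) * f for t, f in zip(theta, x)]
    return theta, averages
```

If the recorded averages trend upward, the first option's adjustment applies: halve `alpha`, rerun, and repeat until the curve goes down.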

Question 2)

Which of the following statements about stochastic gradient descent are true? Check all that apply.

- In order to make sure stochastic gradient descent is converging, we typically compute Jtrain(θ) after each iteration (and plot it) in order to make sure that the cost function is generally decreasing.

- You can use the method of numerical gradient checking to verify that your stochastic gradient descent implementation is bug-free. (One step of stochastic gradient descent computes the partial derivative ∂/∂θj cost(θ, (x(i), y(i))).)

- Before running stochastic gradient descent, you should randomly shuffle (reorder) the training set.

- Suppose you are using stochastic gradient descent to train a linear regression classifier. The cost function $J(\theta) = \frac{1}{2m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)^2$ is guaranteed to decrease after every iteration of the stochastic gradient descent algorithm.

Question 3)

Which of the following statements about online learning are true? Check all that apply.

- Online learning algorithms are most appropriate when we have a fixed training set of size m that we want to train on.

- Online learning algorithms are usually best suited to problems where we have a continuous/non-stop stream of data that we want to learn from.

- When using online learning, you must save every new training example you get, as you will need to reuse past examples to re-train the model even after you get new training examples in the future.

- One of the advantages of online learning is that if the function we’re modeling changes over time (such as if we are modeling the probability of users clicking on different URLs, and user tastes/preferences are changing over time), the online learning algorithm will automatically adapt to these changes.
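This is a minimal online-learning loop in Python. It assumes logistic regression with one gradient step per incoming example, and the names are hypothetical. It illustrates the properties described in the second and fourth statements: each example is used once and then discarded, and the parameters follow a target that changes over time.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def online_update(theta, x, y, alpha):
    """Learn from one incoming example (x, y) with a single gradient step.

    The example is not stored; after this call it can be thrown away.
    """
    h = sigmoid(sum(t * f for t, f in zip(theta, x)))
    return [t - alpha * (h - y) * f for t, f in zip(theta, x)]


def run_stream(theta, stream, alpha=0.5):
    """Consume a (possibly endless) iterable of (x, y) pairs, one at a time."""
    for x, y in stream:
        theta = online_update(theta, x, y, alpha)
    return theta


# Simulated stream whose underlying rule flips partway through.
vs = [i / 10 for i in range(-20, 21) if i != 0]
before = [([1.0, v], 1 if v > 0 else 0) for v in vs] * 10
after = [([1.0, v], 1 if v < 0 else 0) for v in vs] * 20
theta = run_stream([0.0, 0.0], before)
print(theta[1] > 0)  # True: the model learned "positive v -> y = 1"
theta = run_stream(theta, after)
print(theta[1] < 0)  # True: it adapted to the new rule
```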

Question 4)

Assuming that you have a very large training set, which of the following algorithms do you think can be parallelized using map-reduce and splitting the training set across different machines? Check all that apply.

- Linear regression trained using stochastic gradient descent.

- Computing the average of all the features in your training set (for example, in order to perform mean normalization).

- Since stochastic gradient descent processes one example at a time and updates the parameter values after each, it cannot be easily parallelized.

- Logistic regression trained using batch gradient descent.
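Batch gradient descent needs a sum over all m examples, and that sum can be split across machines and recombined. Below is a Python sketch for logistic regression. It simulates the "machines" with list slices in a single process, which is an assumption made for illustration; a real deployment would use a map-reduce framework. The function names are hypothetical.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def map_gradient(theta, chunk):
    """'Map' step on one machine: sum of (h(x) - y) * x over its share of the data."""
    grad = [0.0] * len(theta)
    for x, y in chunk:
        err = sigmoid(sum(t * f for t, f in zip(theta, x))) - y
        for j, f in enumerate(x):
            grad[j] += err * f
    return grad


def batch_step(theta, data, alpha, num_machines=4):
    """One batch gradient descent step with the gradient sum split across machines."""
    m = len(data)
    chunks = [data[k::num_machines] for k in range(num_machines)]  # split the training set
    partials = [map_gradient(theta, c) for c in chunks]  # would run in parallel in practice
    # 'Reduce' step on a single machine: add up the partial sums, then update theta.
    total = [sum(p[j] for p in partials) for j in range(len(theta))]
    return [t - alpha * g / m for t, g in zip(theta, total)]
```

Splitting the data does not change the step. The result matches the single-machine (`num_machines=1`) step up to floating-point rounding.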

Question 5)

Which of the following statements about map-reduce are true? Check all that apply.

- If you have only 1 computer with 1 computing core, then map-reduce is unlikely to help.

- Linear regression and logistic regression can be parallelized using map-reduce, but not neural network training.

- Because of network latency and other overhead associated with map-reduce, if we run map-reduce using N computers, we might get less than an N-fold speedup compared to using 1 computer.

- When using map-reduce with gradient descent, we usually use a single machine that accumulates the gradients from each of the map-reduce machines, in order to compute the parameter update for that iteration.

Week 11 Quiz Answer

Question 1)

Suppose you are running a sliding window detector to find text in images. Your input images are 1000×1000 pixels. You will run your sliding window detector at two scales, 10×10 and 20×20 (i.e., you will run your classifier on lots of 10×10 patches to decide if they contain text or not, and also on lots of 20×20 patches), and you will “step” your detector by 2 pixels each time. About how many times will you end up running your classifier on a single 1000×1000 test set image?

- 500,000

- 100,000

- 1,000,000

- 250,000
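The count can be checked with a short Python sketch. It assumes the window is always kept fully inside the image, and the function name is hypothetical.

```python
def windows_per_image(image_size, window, step):
    """Number of window positions on a square image, keeping the window fully inside it.

    Along one axis the top-left corner can sit at 0, step, 2*step, ...,
    up to image_size - window, giving (image_size - window) // step + 1 positions.
    """
    per_axis = (image_size - window) // step + 1
    return per_axis ** 2


total = windows_per_image(1000, 10, 2) + windows_per_image(1000, 20, 2)
print(total)  # 487097, i.e. about 500,000
```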

Question 2)

Suppose that you just joined a product team that has been developing a machine learning application, using m = 1,000 training examples. You discover that you have the option of hiring additional personnel to help collect and label data. You estimate that you would have to pay each of the labellers $10 per hour, and that each labeller can label 4 examples per minute. About how much will it cost to hire labellers to label 10,000 new training examples?

- $150

- $10,000

- $600

- $400
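Here is the arithmetic, assuming labellers are paid only for the time spent labelling:

$$
\frac{10{,}000\ \text{examples}}{4\ \text{examples/min}} = 2{,}500\ \text{min} \approx 41.7\ \text{h},
\qquad
41.7\ \text{h} \times \$10/\text{h} \approx \$417 \approx \$400
$$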

Question 3)

What are the benefits of performing a ceiling analysis? Check all that apply.

- It helps us decide on allocation of resources in terms of which component in a machine learning pipeline to spend more effort on.

- It can help indicate that certain components of a system might not be worth a significant amount of work improving, because even if it had perfect performance its impact on the overall system may be small.

- If we have a low-performing component, the ceiling analysis can tell us if that component has a high bias problem or a high variance problem.

- It is a way of providing additional training data to the algorithm.

Question 4)

Suppose you are building an object classifier that takes as input an image and recognizes that image as either containing a car (y = 1) or not (y = 0). For example, here are a positive example and a negative example:

After carefully analyzing the performance of your algorithm, you conclude that you need more positive (y = 1) training examples. Which of the following might be a good way to get additional positive examples?

- Mirror your training images across the vertical axis (so that a left-facing car now becomes a right-facing one).

- Take a few images from your training set, and add random Gaussian noise to every pixel.

- Take a training example and set a random subset of its pixels to 0 to generate a new example.

- Select two car images and average them to make a third example.
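Mirroring preserves the label for this task because a mirrored car is still a car. Each flipped image is therefore a new positive example. Below is a minimal sketch, assuming grayscale images stored as nested Python lists; this representation is hypothetical, and an image library would normally do this.

```python
def mirror_across_vertical_axis(image):
    """Reverse the pixel order in every row, so a left-facing car faces right.

    image: a grayscale image stored as a list of rows (lists of pixel values).
    """
    return [list(reversed(row)) for row in image]


img = [[1, 2, 3],
       [4, 5, 6]]
print(mirror_across_vertical_axis(img))  # [[3, 2, 1], [6, 5, 4]]
```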

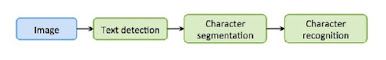

Question 5)

Suppose you have a PhotoOCR system with the following pipeline:

You have decided to perform a ceiling analysis on this system, and find the following:

Which of the following statements are true?

- The potential benefit to having a significantly improved text detection system is small, and thus it may not be worth significant effort trying to improve it.

- If we conclude that the character recognition’s errors are mostly due to the character recognition system having high variance, then it may be worth significant effort obtaining additional training data for character recognition.

- We should dedicate significant effort to collecting additional training data for the text detection system.

- The most promising component to work on is the text detection system, since it has the lowest performance (72%) and thus the biggest potential gain.